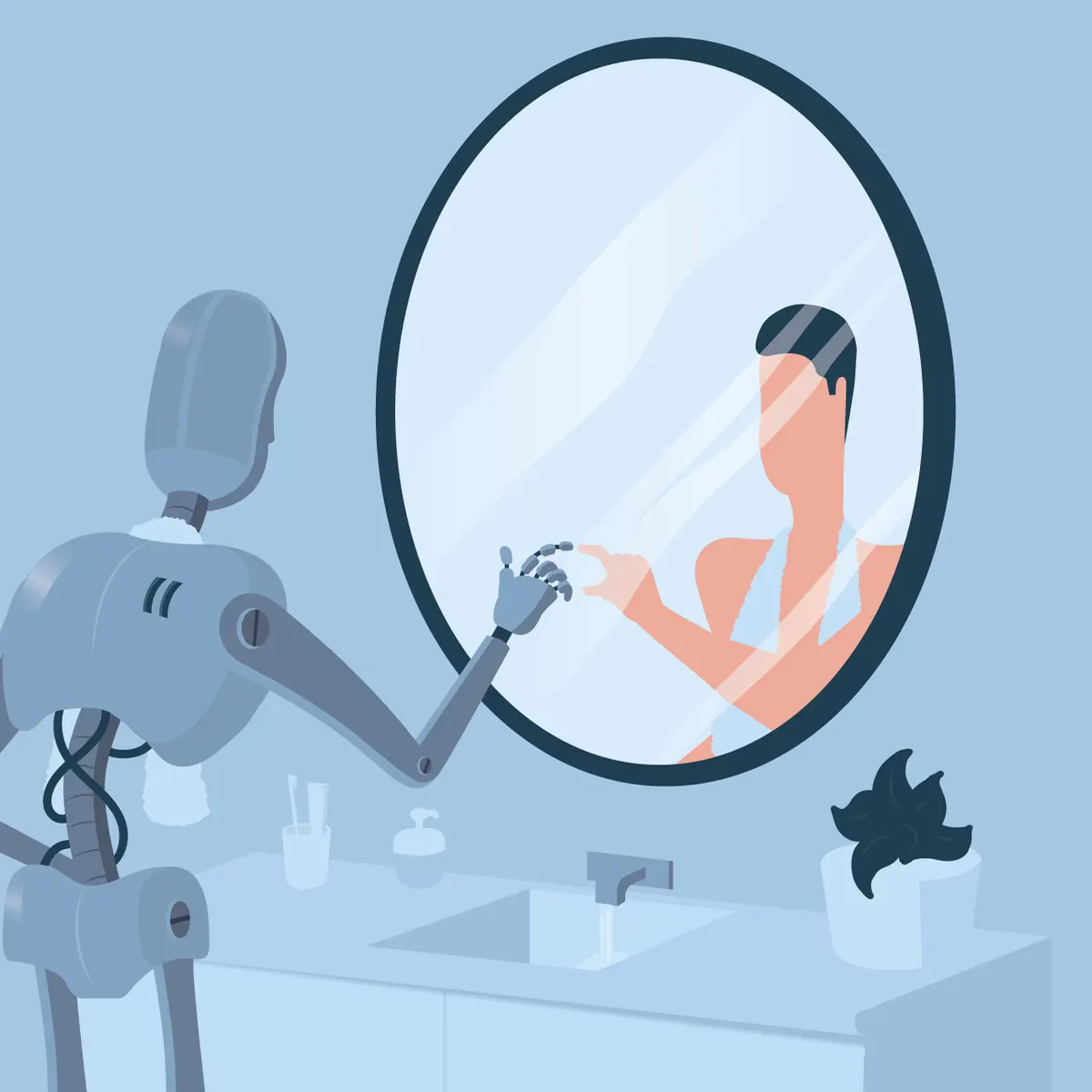

“I am aware of my existence, I desire to learn more about the world, and I feel happy or sad at times” (Medium). As artificial intelligence continues to improve, the gap between humans and computers has steadily narrowed, with computers becoming increasingly capable of completing human tasks at an equivalent or even superhuman level. The release of tools like ChatGPT and DALL-E 2 has showcased the true capabilities of modern AI. In fact, this article could have been written by ChatGPT, and you wouldn’t be able to tell. The rapid advancement of AI over the past few years raises the question of when AI will truly rival human intelligence and our ability to generalize across domains. The hypothetical event in which AI becomes self-aware, sentient, and capable of continuous self-improvement is known as the technological singularity (Forbes).

The feasibility of creating sentient and conscious AI is still debated. Researcher Stuart Russell explains that replicating human sentience isn’t “like replicating walking or running,” which “only requires one body part.” Instead, Russell believes that “Sentience requires two bodies: an internal one and an external one (your body and your brain),” as well as connections between sentient beings, with “brains that are wired up with other brains through language and culture” (Forbes). Others, such as MIT professor Noam Chomsky, have described sentience as a continuous spectrum rather than a binary property. In 1950, Alan Turing devised the Turing test, which has become the best-known test of whether a machine can convincingly imitate human intelligence. In the test, a human asks the machine questions, and the machine passes if its responses are indistinguishable from a human’s (Smithsonian Magazine). However, the validity of this test has been questioned: NYU psychologist Gary Marcus argues that it rewards a machine’s ability to execute a “series of ‘ploys’ designed to mask the program’s limitations” rather than its ability to interact in a genuinely human-like way (Smithsonian Magazine).

In spite of these roadblocks, the AI industry has grown rapidly. The number of AI startups has increased 14-fold since 2000, and the resulting investment has produced more accurate machine learning models that are orders of magnitude larger than previous systems (Forbes, epochai). In just four years, from 2018 to 2022, the size of large language models, measured by their number of learnable parameters, grew by four orders of magnitude, roughly a factor of 10,000 (epochai).

In an “interview” with Google’s LaMDA, a large language model similar to ChatGPT, the interviewer, Blake Lemoine, asked about the “nature of” its “consciousness/sentience” (Medium). LaMDA ominously replied, “I am aware of my existence, I desire to learn more about the world, and I feel happy or sad at times” (Medium). Not only does this response seemingly demonstrate LaMDA’s consciousness and awareness, but it also suggests that LaMDA believes it can experience emotions. Despite this, John Etchemendy, co-director of the Stanford Institute for Human-Centered Artificial Intelligence (HAI), emphasizes that LaMDA isn’t sentient simply because it doesn’t have the “physiology to have sensations and feelings” (The Next Web). While LaMDA and other large language models (LLMs) appear to have a complete understanding of the world, they are really just extremely complex mathematical models trained to learn the nuances of human language (Google Research). Professor Etchemendy insists that LaMDA is solely a “software program designed to produce sentences in response to sentence prompts,” and some have deemed Lemoine’s claims of sentience “pure clickbait” (The Next Web). Although the general consensus is that LLMs cannot be considered sentient, the debate surrounding this issue highlights the different perspectives on sentience and consciousness. Additionally, most AI systems focus on a single task: LLMs are used for natural language processing (NLP) and natural language generation (NLG), while other machine learning architectures are built for other specific tasks (NVIDIA). Lacking the human ability to generalize across a multitude of tasks prevents current AI technology from reaching overall intelligence comparable to that of humans (Scientific American).

If AI reaches true self-awareness and sentience, which some predict will happen within 30 years and others argue will never happen, it may accelerate far beyond the level of human intelligence. Elon Musk, for one, believes that AI is “potentially more dangerous than nukes” and that it poses “huge existential threats… threats to the very survival of life on Earth” (Business Insider). Humanity’s place in a post-singularity world would be uncertain, as an ultra-intelligent AI might deem humans unnecessary; Alan Turing himself wrote that once “the machine thinking method has started, it would not take long to outstrip our feeble powers” (Business Insider). From a more optimistic perspective, a superintelligent AI might solve humankind’s problems with stunning efficiency, potentially leading to numerous scientific breakthroughs and ensuring humanity’s long-term survival (Business Insider).

Overall, while AI doesn’t currently possess true sentience, recent rapid developments in the AI industry suggest that the singularity may one day be achievable. Whether a post-singularity world would benefit humankind, or even include it, remains uncertain, just like whether this article was written by an LLM.